So here I’m, with google app engine starting to give me DeadlineExceededException. I don’t deserve it :)! The servlet under investigation normally runs within 1.5s which is quite long I agree, but as the app is not getting any amount of traffic right now, I told myself that I’m not going to be over quota, and will not optimize anything speculatively.

Anyway, my first naive attempt was just to put that Quota service in action to measure better:

1: QuotaService qs = QuotaServiceFactory.getQuotaService();

2: long start = qs.getCpuTimeInMegaCycles();

3: ....

4: long end = qs.getCpuTimeInMegaCycles();

5: double cpuSeconds = qs.convertMegacyclesToCpuSeconds(end - start);

6: resp.setHeader("gaecost", String.valueOf(cpuSeconds));

Need to say, it doesn’t actually work with local jetty server, and what more it shows when in cloud something that is already available in the Log as cpu_ms consumption, etc. It lets you measure with higher granularity, but hardly worth an effort given we have the excellent goodie from google – Appstats!

The official url for it is: http://code.google.com/appengine/docs/java/tools/appstats.html. And good one to read is: http://googleappengine.blogspot.com/2010/03/easy-performance-profiling-with.html

After that installed and running, we can access the profiling page (per what is configured above):

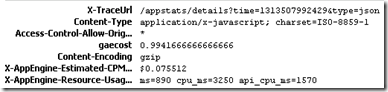

We can see those RunQuery with following datastore_v3.Next causing extra overhead. The profile url for a request can be found either from looking at firebug response headers:

Or when you run locally, in eclipse console:

I first thought that probably I can change the batch size of a fetch and got to this:

Extent<City> extent = pm.getExtent(City.class, false);

extent.getFetchPlan().setFetchSize(FetchPlan.FETCH_SIZE_GREEDY);

But, that didn’t work for me… It was happily doing .Next as it did before. I found then on stackoverflow that it is not supported on gae, dooh! Makes some logic though …

Ok, anyway, I deployed and tried the whole profiling setup in the cloud. Here is what it looked like there:

Hmmm, actually “my” work in blue is only a tiny fraction of those “API” calls to a datastore! I need to get rid of all of those calls at best :).

Memcache or JDO cache? My first stop was Datanucleus cache, here is the url for how to “install”: http://ukena.de/content/enabling-jdo-caching-google-app-engine. And as I’m a complete java novice, I had to read this to make the entities required serializable: http://java.sun.com/developer/technicalArticles/Programming/serialization/

After verifying it works locally, I deployed, and here is the bottom of the profiling graph (all profiling data provided are after first few requests!), as overall there were hundreds of calls to memcache:

With in-response profiling data looking as:

Hmmm, without caching on JDO level I used to have that (again, both after few first requests):

So, it actually got worse! Still there is a question why those .Next are there even when cache is surely in place?!

Next stop then, would be a memcached. Again, not expecting a miracle with that, but here is my target:

- Based onto all that data in datastore I just calculate some expanded stats, a Coverage object with few fields and lists. So I can just cache this target object and this is it, I don’t need granular datastore records to be cached. That is how I expect really to avoid a lot of overhead.

So, here is the updated piece (and I learned the outside api is JCache actually):

Cache cache = null;

List<Coverage> covs = null;

try {

CacheFactory cacheFactory = CacheManager.getInstance()

.getCacheFactory();

cache = cacheFactory.createCache(Collections.emptyMap());

covs = (List<Coverage>) cache.get("coverage");

} catch (CacheException e) {

// ...

}

if (covs != null) {

completeRequest(req, resp, qs, start, out, covs);

return;

}

// ... Initialize coverages from datastore

// When at the end, put coverages into cache and return the response

if (cache != null) {

cache.put("coverage", covs);

}

completeRequest(req, resp, qs, start, out, covs);

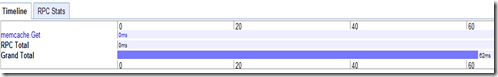

So how it looks now in local profile page?:

As expected! And in the cloud:

This is it! As that “coverage” of mine is only changing rarely (once a day, on a manual batch update basis), my plan for it will be to make it a datastore entity itself, re-calculated once a day. So, memcaching workaround will serve for now.

I should tell I didn’t win on any of the JDO/Datastore optimization points. I really did expect the datastore to be faster though!

At the end – a fresh critic’s view on gae performance and reliability: http://3.14.by/en/read/why-google-appengine-sucks

No comments:

Post a Comment